7 top things to know about Amazon S3

Amazon S3 is a highly reliable and scalable cloud storage service that allows you to store any amount of data for as long as you want. S3 offers simple APIs for managing your content and a rich set of features to meet different business needs, but managing it properly can require substantial time and effort. This article outlines best practices to help you manage your S3 buckets and their objects effectively and ensure they perform as expected.

Which storage class to use for your workloads?

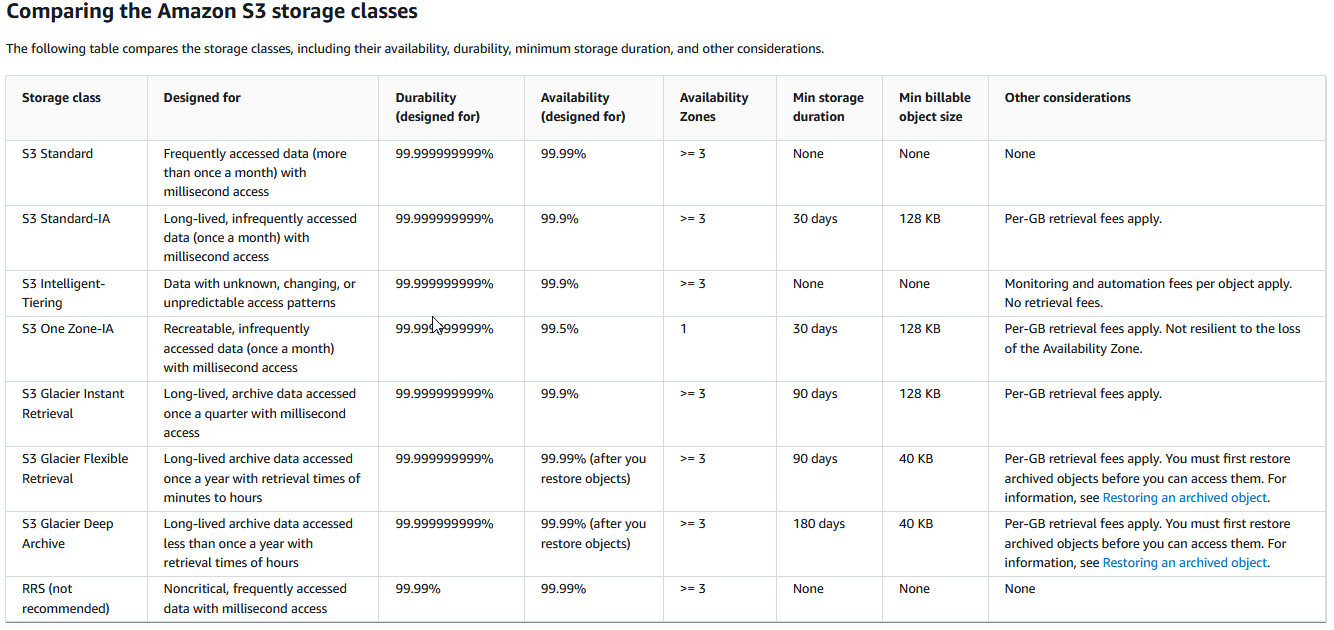

Amazon Simple Storage Service (S3) provides a range of storage classes designed to store different types of data at different cost and performance levels. The available storage classes are:

- Standard: The default storage class for S3, designed for storing data that is frequently accessed and retrieved.

- Standard-Infrequent Access (Standard-IA): A lower-cost storage class for data that is not accessed as frequently but still requires rapid access when needed.

- One Zone-Infrequent Access (One Zone-IA): A lower-cost version of Standard-IA that stores data in a single Availability Zone, so the data can be lost or become unavailable if that Availability Zone fails.

- Reduced Redundancy Storage (RRS): A legacy storage class for easily reproducible data, such as thumbnails or transcoded video files. RRS stores data with less redundancy than Standard storage. AWS no longer recommends it, because S3 Standard is now generally more cost-effective.

- Intelligent-Tiering: A storage class that automatically tiers data based on usage patterns, moving data to the most cost-effective storage tier without any additional effort on the user’s part.

- S3 Glacier: The lowest-cost storage classes for S3, designed for rarely accessed archival data. There are three S3 Glacier storage classes (Glacier Instant Retrieval, Glacier Flexible Retrieval, and Glacier Deep Archive), which offer retrieval times ranging from milliseconds to several hours.

You can choose the storage class that best fits the needs of your data based on the access patterns and retrieval times required for your application.
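
As a rough illustration of that choice, the sketch below maps an access pattern to one of the `StorageClass` values that the S3 API accepts. The function names and every threshold in it are hypothetical assumptions made for this example, not AWS guidance, and the sketch only builds arguments; it does not call AWS.

```python
from typing import Optional


def pick_storage_class(
    reads_per_month: Optional[float],
    reproducible: bool = False,
    acceptable_retrieval_hours: float = 0.0,
) -> str:
    """Map a rough access pattern to an S3 StorageClass value.

    All thresholds are illustrative assumptions, not AWS guidance.
    """
    if reads_per_month is None:
        # Unknown or changing access pattern: let S3 tier the data.
        return "INTELLIGENT_TIERING"
    if reads_per_month >= 1:
        return "STANDARD"
    if acceptable_retrieval_hours >= 12:
        return "DEEP_ARCHIVE"
    if acceptable_retrieval_hours >= 5:
        return "GLACIER"  # S3 Glacier Flexible Retrieval
    if reproducible:
        # Data that can be regenerated tolerates single-AZ storage.
        return "ONEZONE_IA"
    return "STANDARD_IA"


def upload_args(bucket: str, key: str, body: bytes, storage_class: str) -> dict:
    """Build keyword arguments for a boto3 S3 client's put_object call."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "StorageClass": storage_class,
    }
```

With a boto3 S3 client in hand, the result could be passed along as `client.put_object(**upload_args("example-bucket", "report.csv", data, pick_storage_class(0.2)))`, where the bucket and key names are placeholders.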

Protect data using S3 encryption

You have several options for encrypting data in Amazon S3:

- SSE-S3 (Server-Side Encryption with Amazon S3-Managed Keys): This is the default encryption option for S3. It automatically encrypts objects when they are stored and decrypts them when retrieved. Amazon S3 manages the keys used for encryption and decryption.

- SSE-KMS (Server-Side Encryption with AWS KMS-Managed Keys): This option uses keys that are managed by the AWS Key Management Service (KMS) to encrypt and decrypt objects. With this option, you have additional control over the keys and can use key policies to manage access to them.

- SSE-C (Server-Side Encryption with Customer-Provided Keys): This option allows you to provide your own keys for encryption and decryption. You are responsible for securely managing the keys and ensuring that keys are not lost or compromised.

- Client-Side Encryption: With this option, you encrypt data on the client side before uploading it to S3, for example with the Amazon S3 Encryption Client. You manage the encryption process and the keys, and S3 only ever receives data that is already encrypted.

You can choose the encryption option that best meets your security and compliance requirements. For more information, see the Amazon S3 documentation on encryption.
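
For the three server-side options, the difference shows up as a few extra parameters on the upload request. The sketch below builds those parameters in the shape that boto3's `put_object` accepts; the helper names and the example key alias are hypothetical, and nothing here contacts AWS.

```python
def sse_s3_args() -> dict:
    """SSE-S3: Amazon S3 manages the keys."""
    return {"ServerSideEncryption": "AES256"}


def sse_kms_args(kms_key_id: str) -> dict:
    """SSE-KMS: encrypt with a key held in AWS KMS."""
    return {"ServerSideEncryption": "aws:kms", "SSEKMSKeyId": kms_key_id}


def sse_c_args(key: bytes) -> dict:
    """SSE-C: you supply a 256-bit key with every request.

    The same key is needed again to read the object, so losing it
    means losing access to the data.
    """
    if len(key) != 32:
        raise ValueError("SSE-C expects a 32-byte (256-bit) key")
    return {"SSECustomerAlgorithm": "AES256", "SSECustomerKey": key}


def encrypted_upload_args(bucket: str, key: str, body: bytes, sse: dict) -> dict:
    """Merge encryption settings into put_object keyword arguments."""
    args = {"Bucket": bucket, "Key": key, "Body": body}
    args.update(sse)
    return args
```

A call might then look like `client.put_object(**encrypted_upload_args("example-bucket", "secret.txt", data, sse_kms_args("alias/example-key")))`. For SSE-C, the same `SSECustomerAlgorithm` and `SSECustomerKey` values have to be supplied again on `get_object`.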

Enable S3 event notifications to track the actions on your bucket and its objects

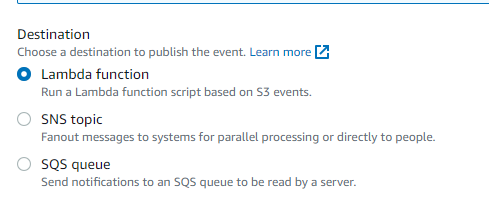

One of the features of S3 is the ability to send event notifications when specific actions occur in your bucket. S3 event notifications can trigger a variety of actions in response to particular events, such as:

- Sending a notification to an Amazon Simple Queue Service (SQS) queue, to be read by a server, when an object is created or deleted.

- Sending a message to an Amazon Simple Notification Service (SNS) topic when an object is created or deleted.

- Invoking an AWS Lambda function when an object is created or deleted.
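
The three destinations above share one configuration shape. The following sketch builds the `NotificationConfiguration` structure that boto3's `put_bucket_notification_configuration` expects; the helper name and the ARN in the usage note are hypothetical. It deliberately targets a single destination, on the assumption that routing the same event type to several destinations needs non-overlapping filters.

```python
from typing import Optional, Sequence

# Maps a destination type to (list key, ARN key) in the S3 API structure.
_DESTINATIONS = {
    "queue": ("QueueConfigurations", "QueueArn"),
    "topic": ("TopicConfigurations", "TopicArn"),
    "lambda": ("LambdaFunctionConfigurations", "LambdaFunctionArn"),
}


def build_notification_config(
    destination: str,
    arn: str,
    events: Sequence[str] = ("s3:ObjectCreated:*", "s3:ObjectRemoved:*"),
    suffix: Optional[str] = None,
) -> dict:
    """Build a notification configuration for one SQS, SNS, or Lambda target."""
    if destination not in _DESTINATIONS:
        raise ValueError(f"unknown destination type: {destination!r}")
    list_key, arn_key = _DESTINATIONS[destination]
    entry = {arn_key: arn, "Events": list(events)}
    if suffix:
        # Only notify for keys ending in the given suffix, e.g. ".jpg".
        entry["Filter"] = {
            "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
        }
    return {list_key: [entry]}
```

It could be applied with `client.put_bucket_notification_configuration(Bucket="example-bucket", NotificationConfiguration=build_notification_config("queue", "arn:aws:sqs:us-east-1:111122223333:example-queue"))`. That call replaces the bucket's existing notification configuration, and the destination's own policy must allow S3 to deliver to it.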

You might want to use S3 event notifications in the following scenarios:

- Automating data processing workflows: You can use S3 event notifications to trigger a Lambda function to process data when it is added to a bucket. This can be useful for automating data pipelines, data transformations, or data analysis tasks.

- Monitoring bucket activity: You can use S3 event notifications to publish an SNS message, which can in turn be delivered by email, when specific actions occur in a bucket, such as when an object is created, deleted, or restored. This can help you track your bucket’s activity and identify potential issues. Note that denied requests do not generate event notifications; to track those, use AWS CloudTrail or S3 server access logs.

- Syncing data between buckets: You can use S3 event notifications to trigger a Lambda function that copies objects to a bucket in a different Region or account when certain events occur. This can be beneficial for maintaining redundant copies of data for disaster recovery or replicating data between environments.

- Automating image or video processing: You can use S3 event notifications to trigger a Lambda function to process images or videos when they are added to a bucket. This can be useful for resizing images, creating thumbnails, or transcoding video files.

Overall, S3 event notifications are a valuable tool for automating workflows and monitoring activity in your S3 buckets. They can help you build scalable and reliable systems that process and store large amounts of data.
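
On the receiving side, a Lambda function gets the notification as a JSON document with a `Records` list. The sketch below shows one way to pull the bucket name and object key out of it; the handler body is a placeholder, and the function names other than the conventional `lambda_handler` are hypothetical. Object keys arrive URL-encoded, so they are decoded before use.

```python
from urllib.parse import unquote_plus


def extract_objects(event: dict) -> list:
    """Return (event_name, bucket, key) tuples from an S3 notification event."""
    results = []
    for record in event.get("Records", []):
        s3 = record.get("s3")
        if not s3:
            continue  # not an S3 record
        bucket = s3["bucket"]["name"]
        # Keys are URL-encoded in the event (spaces arrive as "+").
        key = unquote_plus(s3["object"]["key"])
        results.append((record.get("eventName", ""), bucket, key))
    return results


def lambda_handler(event, context):
    """Entry point: react only to newly created objects."""
    processed = 0
    for event_name, bucket, key in extract_objects(event):
        if event_name.startswith("ObjectCreated:"):
            # Placeholder for real work (resize, transcode, load, ...).
            print(f"new object s3://{bucket}/{key}")
            processed += 1
    return {"processed": processed}
```

Keeping the parsing in its own function makes it easy to test locally with a hand-written event before deploying.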

Use S3 Object Lock to protect your critical data from accidental deletion or overwriting

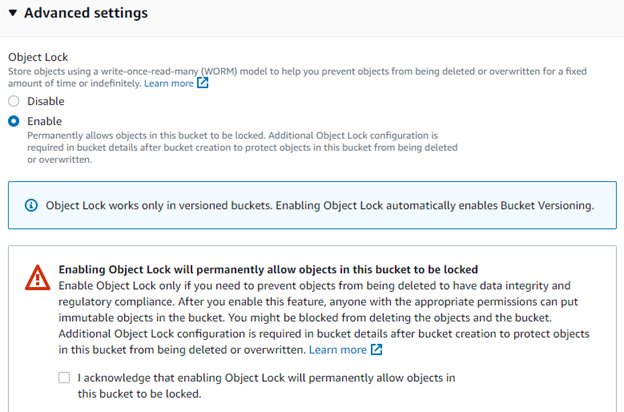

Amazon S3 Object Lock is a feature of Amazon S3 that lets you store objects using a write-once-read-many (WORM) model. You can use WORM protection for scenarios where it is imperative that data is not changed or deleted after it has been written.

Object Lock is useful wherever it is essential to prevent accidental or unauthorized deletion or overwriting of objects, such as in regulated industries or when storing critical business data.

Some specific use cases for Object Lock include:

- Compliance: Object Lock can be used to meet regulatory requirements for data retention, such as the SEC Rule 17a-4 and FINRA Rule 4511, which require certain financial records to be kept for a specific period.

- Data archiving: Object Lock can be applied to store data that needs to be kept for long periods, such as data required for legal or compliance purposes.

- Data protection: Object Lock can protect critical data from being deleted or overwritten, such as backups or disaster recovery data.

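Object Lock works only in buckets that have versioning enabled. You enable Object Lock on the bucket, and then either set retention on individual object versions or configure a default retention period that applies to new objects placed in the bucket. Object Lock could originally be enabled only when a bucket was created; AWS has since added support for enabling it on existing buckets.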
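
As a minimal sketch, the helpers below build the two structures involved: a bucket-level default retention rule and a per-object retention setting. The helper names are hypothetical, while `GOVERNANCE` and `COMPLIANCE` are the retention modes the S3 API defines. The code only constructs arguments and does not call AWS.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

_MODES = ("GOVERNANCE", "COMPLIANCE")


def default_retention_config(mode: str, days: int) -> dict:
    """Bucket-level Object Lock configuration with a default retention rule."""
    if mode not in _MODES:
        raise ValueError(f"mode must be one of {_MODES}")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": days}},
    }


def object_retention(mode: str, days: int, now: Optional[datetime] = None) -> dict:
    """Per-object retention that ends `days` from `now` (UTC by default)."""
    if mode not in _MODES:
        raise ValueError(f"mode must be one of {_MODES}")
    start = now or datetime.now(timezone.utc)
    return {"Mode": mode, "RetainUntilDate": start + timedelta(days=days)}
```

With boto3, these could be passed as `client.put_object_lock_configuration(Bucket="example-bucket", ObjectLockConfiguration=default_retention_config("GOVERNANCE", 30))` and `client.put_object_retention(Bucket="example-bucket", Key="backup.tar", Retention=object_retention("COMPLIANCE", 365))`. Compliance mode is the stricter of the two, so it is worth trying a short period in a test bucket first.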

Enable S3 Versioning for data protection and team collaboration

S3 Versioning is a feature that allows you to keep multiple versions of an object in your bucket. With versioning, you can preserve, retrieve, and restore every version of every object in your Amazon S3 bucket.

Versioning provides two key benefits:

- It helps you recover from accidental deletions or overwrites by maintaining a history of changes to your objects.

- It enables you to implement change detection processes that run against your entire set of objects (e.g., scanning all files and comparing them against previous versions).

Some use cases for S3 versioning include:

- Data protection: Versioning can protect your data from being lost or accidentally deleted. It ensures that you have a historical record of all versions of your objects, so you can easily recover from mistakes or data loss.

- Data backup: You can use versioning as a backup mechanism to protect your data from data corruption, hardware failures, or other disasters.

- Collaboration: Versioning can help teams collaborate on projects by allowing multiple users to make changes to the same object. You can easily track changes and revert to a previous version if needed.

- Auditing and compliance: Versioning can help you meet regulatory and compliance requirements by providing a record of all changes to your data. This can be useful for auditing purposes or for demonstrating compliance with data protection regulations.

S3 versioning is a valuable feature for protecting and managing data in the cloud. It provides a simple and reliable way to preserve, retrieve, and restore all versions of your objects, making it easier to protect your data and ensure business continuity.
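
To make the recovery path concrete, here is a small sketch that builds the versioning setting and picks the version to roll back to from a `list_object_versions` response. The helper names are hypothetical; the dictionary keys (`Versions`, `IsLatest`, `LastModified`, `VersionId`) follow the shape boto3 returns.

```python
from typing import Optional


def versioning_config(enabled: bool = True) -> dict:
    """VersioningConfiguration argument for put_bucket_versioning."""
    return {"Status": "Enabled" if enabled else "Suspended"}


def previous_version_id(listing: dict, key: str) -> Optional[str]:
    """Return the newest non-current version ID of `key`, or None.

    If the current "version" is a delete marker, every entry under
    "Versions" is non-current, so this returns the last real version,
    which is the one to restore after an accidental delete.
    """
    candidates = [
        v for v in listing.get("Versions", [])
        if v["Key"] == key and not v["IsLatest"]
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda v: v["LastModified"])["VersionId"]


def restore_args(bucket: str, key: str, version_id: str) -> dict:
    """copy_object arguments that make an old version current again."""
    return {
        "Bucket": bucket,
        "Key": key,
        "CopySource": {"Bucket": bucket, "Key": key, "VersionId": version_id},
    }
```

Versioning itself would be switched on with `client.put_bucket_versioning(Bucket="example-bucket", VersioningConfiguration=versioning_config())`. Restoring by copy creates a new current version and leaves the full history intact.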

Reduce operational overhead with the lifecycle management feature of S3

S3 Lifecycle Management enables you to automatically transition objects stored in your S3 buckets to different storage classes, or delete them entirely, based on rules you define. Its purpose is to help you reduce storage costs by keeping objects in the most appropriate storage class for their needs without manual intervention.

There are several use cases for S3 Lifecycle Management:

- Archiving: You can use S3 Lifecycle Management to automatically transition objects to the S3 Standard-Infrequent Access (S3 Standard-IA) or S3 One Zone-Infrequent Access (S3 One Zone-IA) storage classes after a certain number of days, or to the S3 Glacier storage class after an even longer period. This can be useful for data that is infrequently accessed but must be retained for compliance or other purposes.

- Data backup: You can use S3 Lifecycle Management to automatically transition objects to the S3 Standard-IA or S3 One Zone-IA storage classes after a certain number of days, or to the S3 Glacier storage class after an even longer period. This can be useful for storing data that is not actively being used, but still needs to be retained as a backup.

- Data tiering: You can use S3 Lifecycle Management to automatically transition objects to different storage classes based on their access patterns. For example, you can store frequently accessed objects in the S3 Standard storage class and transition less frequently accessed objects to the S3 Standard-IA or S3 One Zone-IA storage classes after a certain number of days.

- Data expiration: You can use S3 Lifecycle Management to automatically delete objects that are no longer needed after a certain number of days. This can be useful for managing the lifecycle of temporary data, such as logs or cache files.

In general, S3 Lifecycle Management is a valuable tool for managing the lifecycle of your data in S3 and reducing storage costs by storing objects in the most appropriate storage class for their needs.
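
A lifecycle rule that combines tiering and expiration could be sketched as follows. The helper names, the `logs/` prefix, and the 30/90/365-day defaults are hypothetical choices for illustration; the dictionary layout follows what boto3's `put_bucket_lifecycle_configuration` accepts.

```python
def lifecycle_rule(
    rule_id: str,
    prefix: str,
    ia_after_days: int = 30,
    glacier_after_days: int = 90,
    expire_after_days: int = 365,
) -> dict:
    """Build one rule: Standard-IA, then S3 Glacier, then delete."""
    if not (ia_after_days < glacier_after_days < expire_after_days):
        raise ValueError("day counts must increase: IA < Glacier < expiration")
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},  # apply only to keys under this prefix
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_after_days, "StorageClass": "GLACIER"},
        ],
        "Expiration": {"Days": expire_after_days},
    }


def lifecycle_config(*rules: dict) -> dict:
    """Wrap rules in the LifecycleConfiguration structure."""
    return {"Rules": list(rules)}
```

It might be applied with `client.put_bucket_lifecycle_configuration(Bucket="example-bucket", LifecycleConfiguration=lifecycle_config(lifecycle_rule("archive-logs", "logs/")))`. This call replaces any lifecycle configuration already on the bucket, so existing rules need to be included in the same request.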

Query data with Amazon S3 Select and save $$$

Amazon S3 Select enables you to retrieve a subset of data from an Amazon S3 object. By using Amazon S3 Select to filter this data, you can reduce the amount of data that Amazon S3 transfers, which reduces the cost and latency to retrieve this data. S3 Select is useful for scenarios where you want to retrieve and process only a small amount of data from a large object. For example, you can use S3 Select to filter, transform, and reduce the size of data that you retrieve from an S3 object before sending it to your application.

Some use cases for S3 Select include:

- Extracting data from a large data set stored in S3 for further processing or analysis.

- Filtering data stored in S3 before loading it into a database or data warehouse.

- Transforming data stored in S3 before sending it to a downstream application or service.

To use S3 Select, you can use the S3 API or one of the AWS SDKs. You can also use S3 Select with AWS Lambda to process data stored in S3 as part of a serverless application.
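
As a minimal sketch of the SDK route, assuming S3 Select is available for your account and the object is an uncompressed CSV file with a header row, the helpers below build the request for boto3's `select_object_content` and collect the rows from its streamed response. The helper names and the column names in the usage note are hypothetical.

```python
from typing import Iterable


def select_args(bucket: str, key: str, sql: str) -> dict:
    """Keyword arguments for select_object_content on a CSV object."""
    return {
        "Bucket": bucket,
        "Key": key,
        "ExpressionType": "SQL",
        "Expression": sql,
        # Treat the first line as column names usable in the SQL.
        "InputSerialization": {
            "CSV": {"FileHeaderInfo": "USE"},
            "CompressionType": "NONE",
        },
        "OutputSerialization": {"CSV": {}},
    }


def collect_records(event_stream: Iterable[dict]) -> str:
    """Join the Records payloads from the response's event stream.

    The stream also carries Stats, Progress, and End events, which
    are skipped here.
    """
    chunks = []
    for event in event_stream:
        if "Records" in event:
            chunks.append(event["Records"]["Payload"])
    return b"".join(chunks).decode("utf-8")
```

A call could look like `response = client.select_object_content(**select_args("example-bucket", "sales.csv", "SELECT s.region, s.total FROM S3Object s WHERE s.region = 'EU'"))`, followed by `rows = collect_records(response["Payload"])`. Only the matching rows cross the network, which is where the savings come from.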

Conclusion

Amazon S3 is a powerful and convenient storage service, but like any technology, it requires careful planning and maintenance to ensure it is used effectively and securely. It’s essential to understand the capabilities and limitations of the service, as well as best practices for organizing and securing your data.

One key aspect of maintaining your S3 buckets and objects is regularly reviewing and managing access permissions. It’s important to carefully control who has access to your data, and to periodically review and revoke access as needed.

In addition to managing access, it’s also essential to consider your data’s security at rest, including encrypting data when it is stored in S3 and implementing other security measures such as bucket policies and access control lists.

Finally, it’s important to regularly monitor and optimize the performance of your S3 buckets and objects. This includes optimizing the size and number of objects in your buckets, using appropriate storage classes for different types of data, and using tools like Amazon S3 Transfer Acceleration to improve upload and download speeds.

By following these best practices and regularly reviewing and optimizing your S3 usage, you can ensure that your data is secure, efficient, and well-organized in Amazon S3.